RE:IMAGE exhibition at Articulate Project Space

01-23 November 2025

artists:

Aidan Gageler, Alice Crawford, Andrew Simms, Angharad Evans, Annelies Jahn, John Prendergast, Julie Visible, Kath O'Donnell, Kathryn Bird, Kendal Heyes, Keorattana Luangrathrajasombat, Mark Facchin, Neil Jenkins, Pia Larsen, Samuel James, Steven Fasan, Sue Murray, Zorica Purlija

Curated by Anke Stäcker and Beata Geyer

:::

I'm gallery sitting this Friday, 14 Nov, 11am-2pm, if you'd like to pop in. otherwise, visit the exhibition Friday to Sunday each week until 23 Nov, 11am-5pm.

Artist talks this Sunday, 16 November, 2-4pm

:::

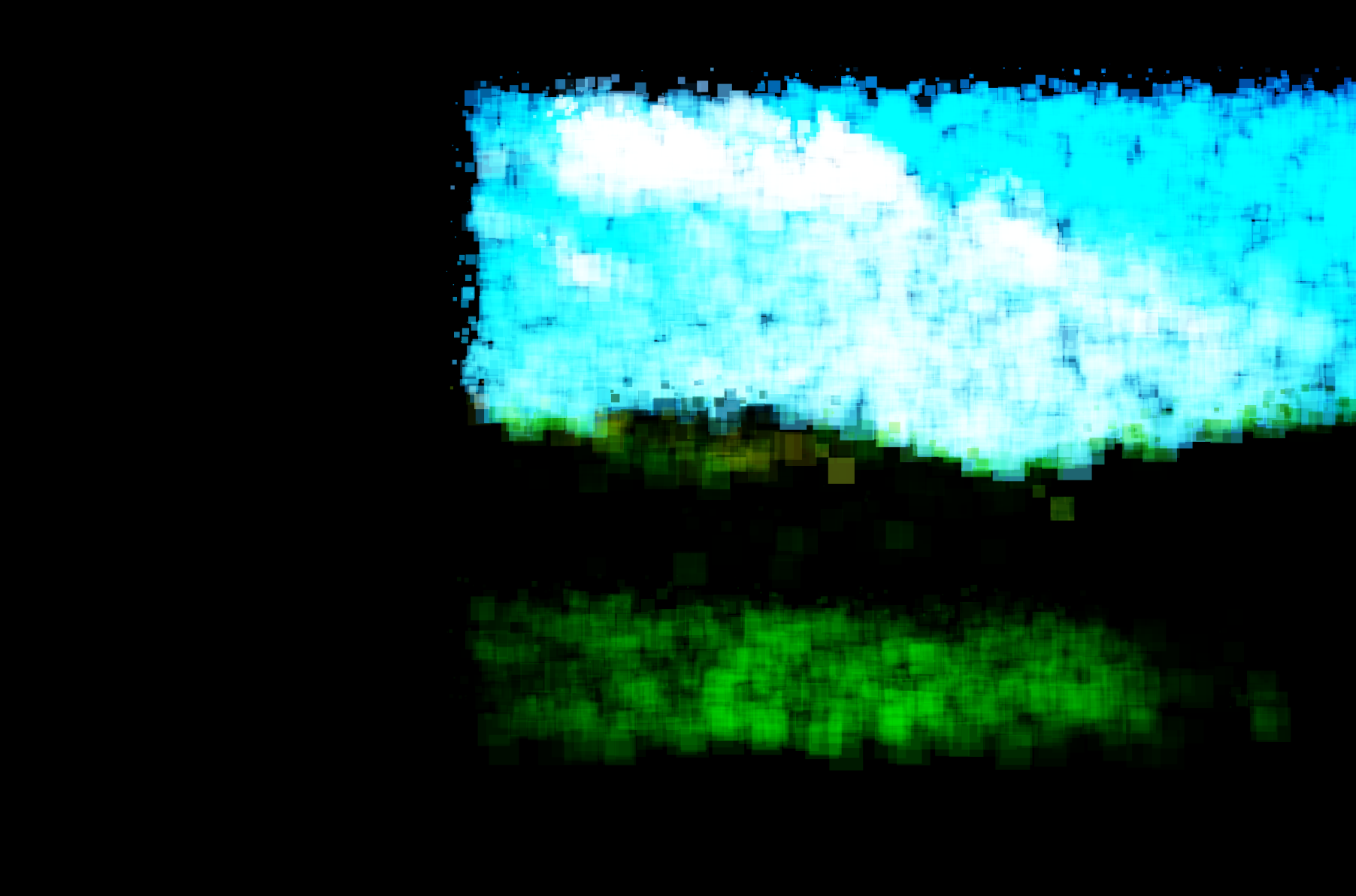

my work is called "remuxed" ::: memories outsourced to photographs, then dynamically remuxed / reconstituted by this hand-coded drawing program. it's a more personal topic / inquiry than my usual extinction / ecology related works. I don't see images in my mind; as I understand it, this is called aphantasia. I do see fragments (and conversations) when I dream, but they're quickly lost upon waking. so this drawing is a simple attempt at investigating what my brain can't do.

when I travelled for work, I'd take photographs, and there are thousands of them on my flickr account. I'd collect them to use 'one day', and that 'day' has finally come. I wanted to make a drawing that would select one or a few random photos from my flickr account, then reconstitute them, like the memories my mind's eye should be able to see but cannot. these memories were outsourced to the photographs; I can see them on the screen. it's a bit like the section of the Wim Wenders film "Until the End of the World" where they create and test a machine to see their dreams. it's a very seductive idea: being able to see what you cannot, finding a way to see what my brain can't.
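picking 'a random photo or few' from a big collection can be done with a partial Fisher-Yates shuffle. this is a minimal JavaScript sketch, not the code in the work (which currently embeds a single image). `pickRandomPhotos` and the file names are hypothetical, and it assumes the photos are already available as a list rather than fetched from flickr.

```javascript
// Hypothetical helper: choose `count` distinct photos at random using a
// partial Fisher-Yates shuffle. `rand` defaults to Math.random but can be
// swapped for a seeded generator.
function pickRandomPhotos(photos, count, rand = Math.random) {
  const pool = photos.slice(); // copy so the original list is untouched
  const n = Math.min(count, pool.length);
  for (let i = 0; i < n; i++) {
    // pick one of the not-yet-chosen items pool[i..end] and move it to slot i
    const j = i + Math.floor(rand() * (pool.length - i));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }
  return pool.slice(0, n);
}

// usage: hypothetical file names standing in for the photo archive
const archive = ["trip-001.jpg", "trip-002.jpg", "trip-003.jpg", "trip-004.jpg"];
const chosen = pickRandomPhotos(archive, 2);
console.log(chosen);
```

only the first `count` slots get shuffled, so the cost stays small even with thousands of photos in the list.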

I made the drawing for the RE:IMAGE exhibition on a short deadline, so there were some parts I dropped and left for future versions, such as collecting multiple images dynamically. this version has one image included in the drawing program. I did initially have three images, but it was becoming too 'busy' visually. that does match the idea, but for this initial version I decided to keep it simple. I've since worked out how to collect multiple images dynamically, so I'll try that in a future version. I may need to find another platform to host it on, as these network calls are usually blocked; one limitation is that I need to contain all the components of the drawing within the drawing itself.

the title reflects part of my previous career. we had equipment called a mux, a multiplexer, which combines multiple components and outputs a transport stream, i.e. a single combined digital object. if we wanted to translate, change or remap a value, we would often remux it, changing the output, then pass it through to another mux or to the transmission system. so I thought this term / idea was a useful analogy: the drawing remuxes the memory held in the photograph, then passes it through to the final mux, my brain. not only is the memory outsourced to a photograph (tech), but visually recalling that memory is also outsourced to the drawing code (tech).
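for anyone curious about the analogy: in a broadcast MPEG transport stream, each 188-byte packet starts with the sync byte 0x47 and carries a 13-bit PID saying which component it belongs to. here's a toy JavaScript sketch of muxing (interleaving components into one stream) and remuxing (passing the stream through with some PIDs remapped). it's a simplification, assuming empty placeholder payloads: a real mux also rewrites the PAT/PMT tables, continuity counters and timing, and the function names here are hypothetical.

```javascript
// Toy sketch of mux / remux using MPEG transport stream packet headers.
const TS_PACKET_SIZE = 188;
const SYNC_BYTE = 0x47;

// Build a placeholder TS packet carrying the given 13-bit PID.
function makePacket(pid) {
  const pkt = new Uint8Array(TS_PACKET_SIZE).fill(0xff); // stuffing bytes as dummy payload
  pkt[0] = SYNC_BYTE;
  pkt[1] = (pid >> 8) & 0x1f; // top 5 bits of the PID (flag bits left at 0)
  pkt[2] = pid & 0xff;        // low 8 bits of the PID
  pkt[3] = 0x10;              // not scrambled, payload only, continuity counter 0
  return pkt;
}

// Read the PID back out of a packet header.
function getPid(pkt) {
  return ((pkt[1] & 0x1f) << 8) | pkt[2];
}

// "mux": interleave packets from several components into one stream, round-robin.
function mux(components) {
  const out = [];
  const longest = Math.max(...components.map(c => c.length));
  for (let i = 0; i < longest; i++) {
    for (const c of components) {
      if (i < c.length) out.push(c[i]);
    }
  }
  return out;
}

// "remux": pass the stream through, remapping PIDs listed in pidMap.
function remux(stream, pidMap) {
  return stream.map(pkt => {
    const pid = getPid(pkt);
    if (!pidMap.has(pid)) return pkt; // untouched components pass straight through
    const copy = pkt.slice();         // copy so the input stream is not modified
    const newPid = pidMap.get(pid);
    copy[1] = (copy[1] & 0xe0) | ((newPid >> 8) & 0x1f); // keep the 3 flag bits
    copy[2] = newPid & 0xff;
    return copy;
  });
}

// usage: one "video" and one "audio" component, then remap the audio PID
const video = [makePacket(0x100), makePacket(0x100)];
const audio = [makePacket(0x101)];
const ts = mux([video, audio]);
console.log(ts.map(getPid)); // [ 256, 257, 256 ]
const remuxed = remux(ts, new Map([[0x101, 0x201]]));
console.log(remuxed.map(getPid)); // [ 256, 513, 256 ]
```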

it also reminded me of the simplified arguments about how AI uses / recreates images. my code / drawing doesn't use AI; it uses a simple algorithm that I wrote, collaging fragments of example code from documentation with choices and logic I've added myself. so the implementation is very different from how AI models do it these days, but it reminded me of the approach described in the arguments for and against AI (whether or not those arguments are technically correct!)

:::

it is a code-based drawing where the software is drawing the photograph, much like a person might draw from a reference photo, translating the colours from the source image to the canvas. in this version, 'noise' / errors are introduced: each colour is drawn at a small random offset from its original position, which gives a softer, more painterly effect and lets the software have some imperfections, like a human artist might. the source photo becomes the reference for this new pixelated image / drawing, recreated in random fragments.
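the idea of drawing from a reference photo with small random offsets can be sketched in a few lines. this is not the code in the work; it's a minimal JavaScript illustration, assuming images are plain `{ width, height, data }` objects holding RGBA bytes (the same shape as a canvas `ImageData`), with a hypothetical `redrawWithJitter` function and made-up `cell` and `maxOffset` parameters. each cell's colour is sampled from the source, then placed slightly off its original position, and cells are drawn in shuffled order.

```javascript
// Minimal sketch: redraw a source image cell by cell, in random order,
// placing each sampled colour at a small random offset ("noise").
// Images are { width, height, data } with RGBA bytes, like canvas ImageData.

function makeImage(width, height) {
  return { width, height, data: new Uint8ClampedArray(width * height * 4) };
}

// Random integer in [-max, max].
function jitter(max, rand) {
  return Math.floor(rand() * (2 * max + 1)) - max;
}

function redrawWithJitter(src, { cell = 4, maxOffset = 3, rand = Math.random } = {}) {
  const out = makeImage(src.width, src.height); // starts fully transparent
  // list every cell's top-left corner...
  const cells = [];
  for (let y = 0; y < src.height; y += cell) {
    for (let x = 0; x < src.width; x += cell) cells.push([x, y]);
  }
  // ...then shuffle (Fisher-Yates) so the fragments are drawn in random order
  for (let i = cells.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [cells[i], cells[j]] = [cells[j], cells[i]];
  }
  for (const [cx, cy] of cells) {
    // sample the reference colour at the cell's top-left pixel
    const s = (cy * src.width + cx) * 4;
    const rgba = src.data.subarray(s, s + 4);
    // shift where the cell lands by a small random offset
    const ox = cx + jitter(maxOffset, rand);
    const oy = cy + jitter(maxOffset, rand);
    for (let y = oy; y < oy + cell; y++) {
      for (let x = ox; x < ox + cell; x++) {
        if (x < 0 || y < 0 || x >= out.width || y >= out.height) continue; // clip at edges
        out.data.set(rgba, (y * out.width + x) * 4);
      }
    }
  }
  return out;
}
```

because shifted cells overlap, whichever lands last wins, and a few transparent gaps can open up, so each run gives a different arrangement of the same colours. in a browser, the input could come from `ctx.getImageData(0, 0, w, h)` and the result could be shown with `ctx.putImageData(new ImageData(out.data, out.width, out.height), 0, 0)`.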

:::

it's a dynamic JavaScript code-based drawing running live off the blockchain and IPFS (a distributed, peer-to-peer file storage network). each time the drawing page is reloaded, a new version is drawn (same image, different positions), so there are endless versions of the painting / drawing. the drawing is displayed in the web browser to reflect the networked performance of the act of drawing: the machine mirroring human processes.

although it's hosted on the blockchain and IPFS, this drawing doesn't specifically use blockchain metadata to control aspects of the drawing, as many of my other code-based drawings do. randomness is still a key feature, though.
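the difference between 'a new version on every reload' and drawings driven by blockchain metadata mostly comes down to where the random seed comes from. a minimal JavaScript sketch, assuming a small, widely used 32-bit seeded generator (mulberry32) and a hypothetical token hash string; per the post, this drawing uses fresh randomness, and the hash-seeded function is only there for contrast.

```javascript
// mulberry32: a small 32-bit seeded PRNG returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// like this drawing: a new seed on every page load, so every reload differs
function freshRandom() {
  return mulberry32(Math.floor(Math.random() * 4294967296));
}

// hypothetical alternative (not used in this work): derive the seed from a
// token hash, so the same token always redraws the same version
function randomFromHash(hashHex) {
  const seed = parseInt(hashHex.replace(/^0x/, "").slice(0, 8), 16);
  return mulberry32(seed);
}
```

funnelling every random choice through one seeded generator is what makes a version reproducible: keep the seed and you can redraw it, throw it away and each reload is new.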

:::

direct link / IPFS (refresh the page to see a new version, and press s to save the current image to your computer):

RE:IMAGE exhibition information: https://www.articulateprojectspace.org/project/25-11-D

:::

this post is also found at:

https://aliak.substack.com/p/reimage-exhibition-at-articulate

https://www.instagram.com/p/DQ-26BVj0aj

::: category:

::: location:

- AliaK's blog
